In this article, we will see how voice recognition can translate spoken words into text and show it as closed captions, so that a person with hearing loss can follow what others are saying. This is useful for students, employees, and client communication, and it also lets users dictate text instead of typing it.

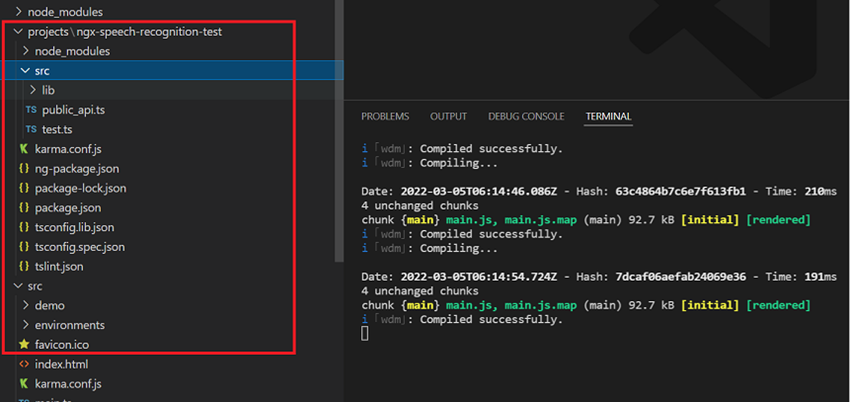

First, we created a basic Angular application using the Angular CLI.

Here I have used ngx-speech-recognition as a custom plugin. Alternatively, we can create a separate library inside the application and use that newly created library.

Please refer to this link for creating custom Angular libraries.

I have used the ngx-speech-recognition service library from within the application, created as a library project named ngx-speech-recognition-test.

How to Create Voice Recognition using Ngx-Speech-Recognition in Angular

Next, we created a main component and a sub-component. The main component is only a placeholder for project-specific content; if you don't need it, you can skip it. The voice recognition logic lives in the sub-component. Here is the complete code for the sub-component.

sub.component.ts

```ts
import { Component } from '@angular/core';
import { ColorGrammar } from './sub.component.grammar';
import {
  SpeechRecognitionLang,
  SpeechRecognitionMaxAlternatives,
  SpeechRecognitionGrammars,
  SpeechRecognitionService,
} from '../../../../../projects/ngx-speech-recognition-test/src/public_api';

@Component({
  selector: 'test-sub',
  templateUrl: './sub.component.html',
  styleUrls: ['./sub.component.css'],
  providers: [
    // Values injected into this component's own SpeechRecognitionService.
    {
      provide: SpeechRecognitionLang,
      useValue: 'en-US',
    },
    {
      provide: SpeechRecognitionMaxAlternatives,
      useValue: 1,
    },
    {
      provide: SpeechRecognitionGrammars,
      useValue: ColorGrammar,
    },
    SpeechRecognitionService,
  ],
})
export class SubComponent {
  public started = false;
  public message = '';

  constructor(private service: SpeechRecognitionService) {
    // The service instance is resolved from SubComponent's providers above.
    // Recognition events are logged to the console.
    this.service.onstart = (e) => {
      console.log('voice start');
    };

    this.service.onresult = (e) => {
      // Transcript of the first alternative of the first result.
      this.message = e.results[0].item(0).transcript;
      console.log('voice message result', this.message, e);
    };
  }

  start() {
    this.started = true;
    this.service.start();
  }

  stop() {
    this.started = false;
    this.service.stop();
  }
}
```

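SpeechRecognitionLang, SpeechRecognitionMaxAlternatives, SpeechRecognitionGrammars and SpeechRecognitionService are all imported from the ngx-speech-recognition-test library.

SpeechRecognitionService is listed in the component's providers, so the component gets its own instance, configured by the three tokens. SpeechRecognitionLang sets the recognition language as a language tag; here it is 'en-US'.

SpeechRecognitionMaxAlternatives sets the maximum number of alternative transcripts returned for each recognition result. I have set it to 1, so each result carries a single transcript.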
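SpeechRecognitionMaxAlternatives only matters once it is greater than 1: each result then carries several candidate transcripts, each with a confidence score. The sketch below picks the candidate with the highest confidence instead of relying on item(0). It assumes results shaped like the Web Speech API's SpeechRecognitionResult (a length plus an item(index) method returning a transcript and a confidence); the Alternative and ResultLike interfaces are stand-ins declared so the code runs outside a browser, not types exported by ngx-speech-recognition-test.

```typescript
// Stand-in shapes mirroring SpeechRecognitionAlternative / SpeechRecognitionResult
// (assumption: declared here only so the sketch runs without a browser).
interface Alternative {
  transcript: string;
  confidence: number;
}

interface ResultLike {
  readonly length: number;
  item(index: number): Alternative;
}

// Returns the alternative with the highest confidence,
// or undefined when the result has no alternatives.
function bestAlternative(result: ResultLike): Alternative | undefined {
  let best: Alternative | undefined;
  for (let i = 0; i < result.length; i++) {
    const alt = result.item(i);
    if (best === undefined || alt.confidence > best.confidence) {
      best = alt;
    }
  }
  return best;
}

// Usage with a hand-built result holding three alternatives.
const alts: Alternative[] = [
  { transcript: 'hello welcome to angular', confidence: 0.91 },
  { transcript: 'hello welcome to angler', confidence: 0.42 },
  { transcript: 'yellow welcome to angular', confidence: 0.37 },
];
const sample: ResultLike = { length: alts.length, item: (i) => alts[i] };
console.log(bestAlternative(sample)?.transcript); // hello welcome to angular
```

With maxAlternatives set to 1, as in this component, the loop simply returns the single transcript, so the helper is only worth adding if you raise that value.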

SpeechRecognitionGrammars supplies a grammar list, that is, the words or phrases the recognizer should expect, which can help it pick the intended word.

Here that list is ColorGrammar, defined in sub.component.grammar.ts. All of the setup configuration is in the code above.

sub.component.grammar.ts

```ts
/**
 * @see https://developer.mozilla.org/ja/docs/Web/API/SpeechGrammarList#Examples
 */
export const ColorGrammar = new SpeechGrammarList();
ColorGrammar.addFromString(`#JSGF V1.0;
grammar colors;
public <color> = aqua | azure | beige | bisque |
  black | blue | brown | chocolate | coral | crimson | cyan | fuchsia |
  ghostwhite | gold | goldenrod | gray | green | indigo | ivory | khaki |
  lavender | lime | linen | magenta | maroon | moccasin | navy | olive |
  orange | orchid | peru | pink | plum | purple | red | salmon | sienna |
  silver | snow | tan | teal | thistle | tomato | turquoise |
  violet | white | yellow ;
`, 1);
```
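Typing the color list by hand works for a fixed vocabulary. If the words come from data instead, a small helper can generate the same kind of JSGF string. buildJsgf below is a hypothetical helper, not part of the library, and it assumes every entry is a plain word without JSGF special characters.

```typescript
// Hypothetical helper: builds a JSGF grammar with one public rule whose
// alternatives are separated by "|", in the same form as ColorGrammar.
function buildJsgf(grammarName: string, ruleName: string, words: readonly string[]): string {
  if (words.length === 0) {
    throw new Error('A JSGF rule needs at least one alternative.');
  }
  // Reject entries that would break the rule syntax (assumption: plain words only).
  const bad = words.find((w) => w.trim() === '' || /[|;<>]/.test(w));
  if (bad !== undefined) {
    throw new Error(`Unsupported grammar entry: "${bad}"`);
  }
  return `#JSGF V1.0; grammar ${grammarName}; public <${ruleName}> = ${words.join(' | ')} ;`;
}

const colorJsgf = buildJsgf('colors', 'color', ['aqua', 'azure', 'beige']);
console.log(colorJsgf); // #JSGF V1.0; grammar colors; public <color> = aqua | azure | beige ;
```

In sub.component.grammar.ts the result could be passed to ColorGrammar.addFromString(colorJsgf, 1). Keep in mind that grammar support varies between browsers, so the list may act as a hint at most.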

sub.component.html

```html
<p>Voice message: {{message}}</p>
<button [disabled]="started" (click)="start()">Voice start</button>
<button [disabled]="!started" (click)="stop()">Voice end</button>
<p>lang: en-US</p>
```
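The buttons depend on the started flag, which the component only changes in start() and stop(). If the browser ends recognition on its own (for example after a pause in speech), Voice start can stay disabled. One way to keep the flag in sync is to clear it in an onend callback. The sketch assumes a recognizer with start(), stop() and an onend property, modelled on the onstart/onresult setters used in SubComponent; RecognizerLike, VoiceToggle and FakeRecognizer are hypothetical names used only for illustration.

```typescript
// Hypothetical recognizer shape, modelled on the setters used in SubComponent.
interface RecognizerLike {
  onend: (() => void) | null;
  start(): void;
  stop(): void;
}

// Keeps "started" in sync with the recognizer, including when recognition
// ends without the user clicking Voice end.
class VoiceToggle {
  started = false;
  private readonly recognizer: RecognizerLike;

  constructor(recognizer: RecognizerLike) {
    this.recognizer = recognizer;
    // Clear the flag whenever recognition ends, whoever ended it.
    this.recognizer.onend = () => {
      this.started = false;
    };
  }

  start(): void {
    if (this.started) return; // ignore a second click while already listening
    this.started = true;
    this.recognizer.start();
  }

  stop(): void {
    this.started = false;
    this.recognizer.stop();
  }
}

// Fake recognizer for trying the logic without a browser.
class FakeRecognizer implements RecognizerLike {
  onend: (() => void) | null = null;
  running = false;

  start(): void {
    this.running = true;
  }

  stop(): void {
    this.running = false;
    this.onend?.();
  }

  // Simulates the browser ending recognition by itself, e.g. after silence.
  endOnItsOwn(): void {
    this.running = false;
    this.onend?.();
  }
}

const fake = new FakeRecognizer();
const toggle = new VoiceToggle(fake);
toggle.start();
fake.endOnItsOwn();
console.log(toggle.started); // false
```

In SubComponent the equivalent would be this.service.onend = () => { this.started = false; } in the constructor, provided the service exposes an onend setter the way it exposes onstart and onresult.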

sub.module.ts

```ts
import { NgModule } from '@angular/core';
import { CommonModule } from '@angular/common';
import { SubComponent } from './components';

@NgModule({
  imports: [CommonModule],
  declarations: [SubComponent],
  exports: [SubComponent],
})
export class SubModule { }
```

Then add the following code to demo.component.ts, demo.component.html and demo.module.ts.

demo.component.ts

```ts
import { Component } from '@angular/core';

@Component({
  selector: 'demo-root',
  templateUrl: './demo.component.html',
  styleUrls: ['./demo.component.css'],
})
export class DemoComponent { }
```

demo.component.html

```html
<test-main></test-main>
<test-sub></test-sub>
```

demo.module.ts

```ts
import { BrowserModule } from '@angular/platform-browser';
import { NgModule } from '@angular/core';
import { RouterModule } from '@angular/router';
import { CommonModule } from '@angular/common';
import { DemoComponent } from './demo.component';
import { MainComponent } from './components';
import { SubModule } from '../demo/sub';
import { SpeechRecognitionModule } from '../../projects/ngx-speech-recognition-test/src/public_api';

@NgModule({
  declarations: [
    // app container.
    DemoComponent,
    MainComponent,
  ],
  imports: [
    BrowserModule,
    CommonModule,
    RouterModule,
    SpeechRecognitionModule.withConfig({
      lang: 'en',
      interimResults: true,
      maxAlternatives: 10,
      // Sample handlers that log each recognition event.
      onaudiostart: (ev: Event) => console.log('onaudiostart', ev),
      onsoundstart: (ev: Event) => console.log('onsoundstart', ev),
      onspeechstart: (ev: Event) => console.log('onspeechstart', ev),
      onspeechend: (ev: Event) => console.log('onspeechend', ev),
      onsoundend: (ev: Event) => console.log('onsoundend', ev),
      onaudioend: (ev: Event) => console.log('onaudioend', ev),
      onresult: (ev: SpeechRecognitionEvent) => console.log('onresult', ev),
      onnomatch: (ev: SpeechRecognitionEvent) => console.log('onnomatch', ev),
      onerror: (ev: SpeechRecognitionError) => console.log('onerror', ev),
      onstart: (ev: Event) => console.log('onstart', ev),
      onend: (ev: Event) => console.log('onend', ev),
    }),
    // SubComponent (declared in SubModule) has its own providers, so the
    // SpeechRecognitionService it injects is created from those providers
    // (en-US, maxAlternatives 1, ColorGrammar) rather than from this config.
    SubModule,
  ],
  providers: [],
  bootstrap: [DemoComponent],
})
export class DemoModule { }
```

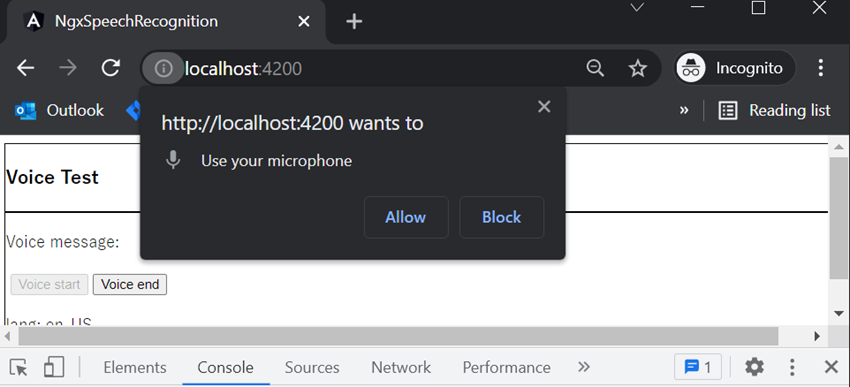

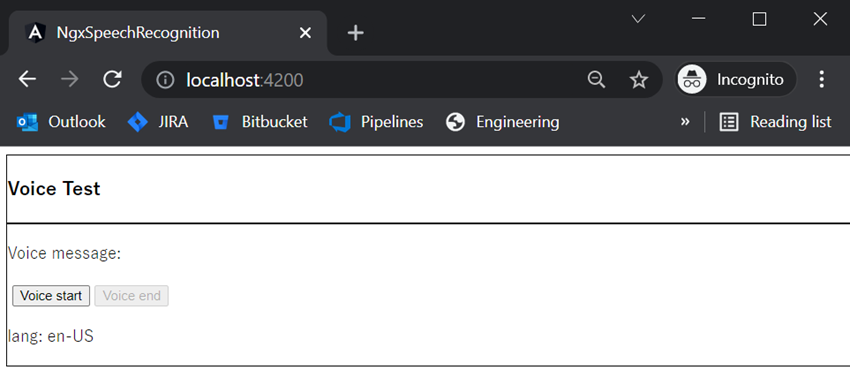

After adding the code above, run the application with ng serve --open or npm start. The application is served at http://localhost:4200/, and ng serve --open also opens it in the default browser.
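Speech recognition depends on the browser: not every browser implements the Web Speech API, and Chromium-based browsers have historically exposed it under webkit-prefixed names such as webkitSpeechRecognition and webkitSpeechGrammarList. Because sub.component.grammar.ts calls new SpeechGrammarList() as soon as it is loaded, a browser that does not define that name fails before the component renders. A minimal feature-detection sketch, assuming you pass it the global window object (typed loosely here so it also runs outside a browser); findSpeechCtor and the name lists are hypothetical, not part of the library.

```typescript
type SpeechCtor = new () => unknown;

// Names under which browsers may expose the Web Speech API constructors.
const RECOGNITION_NAMES = ['SpeechRecognition', 'webkitSpeechRecognition'] as const;
const GRAMMAR_LIST_NAMES = ['SpeechGrammarList', 'webkitSpeechGrammarList'] as const;

// Returns the first constructor found on the given scope (window in a browser),
// or undefined when none of the names is available.
function findSpeechCtor(scope: Record<string, unknown>, names: readonly string[]): SpeechCtor | undefined {
  for (const name of names) {
    const candidate = scope[name];
    if (typeof candidate === 'function') {
      return candidate as SpeechCtor;
    }
  }
  return undefined;
}

// Usage with a fake scope that only has the prefixed grammar list.
class FakeGrammarList {}
const found = findSpeechCtor({ webkitSpeechGrammarList: FakeGrammarList }, GRAMMAR_LIST_NAMES);
console.log(found === FakeGrammarList); // true
```

In the app you might call findSpeechCtor(window as unknown as Record<string, unknown>, RECOGNITION_NAMES) and show a message instead of the buttons when it returns undefined.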

The first time you run the application and click the Voice start button, the browser asks for permission to use your microphone. Click Allow and continue with the following steps.
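If the user blocks the microphone instead, recognition fails with an error event whose error property is 'not-allowed'. The module config only logs errors; a small lookup can turn the standard Web Speech API error codes into messages for the user. The message wording and the describeSpeechError name are my own, not part of ngx-speech-recognition-test.

```typescript
// Error codes reported in SpeechRecognitionErrorEvent.error by the Web Speech API.
type SpeechErrorCode =
  | 'no-speech'
  | 'aborted'
  | 'audio-capture'
  | 'network'
  | 'not-allowed'
  | 'service-not-allowed'
  | 'language-not-supported';

// User-facing messages (illustrative wording).
const ERROR_MESSAGES: Record<SpeechErrorCode, string> = {
  'no-speech': 'No speech was detected. Please try again.',
  aborted: 'Listening was stopped.',
  'audio-capture': 'No microphone was found or it could not be used.',
  network: 'A network error interrupted speech recognition.',
  'not-allowed': 'Microphone access was blocked. Allow it in the browser settings and try again.',
  'service-not-allowed': 'The browser does not allow the speech service to be used here.',
  'language-not-supported': 'The selected language is not supported.',
};

// Own-property check so inherited names such as "toString" are not treated as codes.
function isKnownSpeechError(code: string): code is SpeechErrorCode {
  return Object.prototype.hasOwnProperty.call(ERROR_MESSAGES, code);
}

function describeSpeechError(code: string): string {
  return isKnownSpeechError(code) ? ERROR_MESSAGES[code] : `Speech recognition failed (${code}).`;
}

console.log(describeSpeechError('not-allowed'));
```

Wired into SubComponent, this could look like this.service.onerror = (e) => { this.message = describeSpeechError(e.error); this.started = false; }, assuming the service exposes an onerror setter as the module config suggests.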

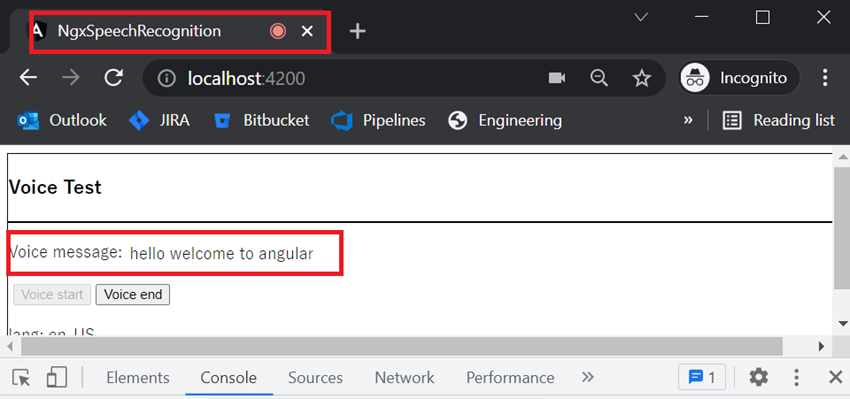

Next, click the Voice start button and speak some words, for example "hello welcome to angular". The voice recognition captures the words one by one and stores them in message. Then say something else, such as "I learn typescript", and click the Voice end button. The message then shows all the words on a single line: "hello welcome to angular I learn typescript".
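The onresult handler in SubComponent reads only e.results[0], the first result of the session. When the recognizer delivers several results in one session, or interim results (interimResults: true in the module config), a common pattern is to walk the result list from e.resultIndex, append final results to a running transcript, and keep the interim text separately. Whether several phrases arrive in one session depends on the recognizer's continuous setting, which this article's configuration does not show. The interfaces below are hypothetical stand-ins shaped like SpeechRecognitionEvent so the sketch runs outside a browser.

```typescript
// Stand-in shapes mirroring SpeechRecognitionEvent and its result list.
interface AltLike {
  transcript: string;
}
interface ResultEntry {
  readonly isFinal: boolean;
  item(index: number): AltLike;
}
interface ResultEventLike {
  readonly resultIndex: number;
  readonly results: { readonly length: number; item(index: number): ResultEntry };
}

// Appends final phrases to one running transcript and keeps the
// not-yet-final (interim) text separately, e.g. for a live caption.
class TranscriptBuffer {
  private finalText = '';
  interim = '';

  handle(event: ResultEventLike): void {
    let interim = '';
    // resultIndex is the lowest index that changed in this event.
    for (let i = event.resultIndex; i < event.results.length; i++) {
      const result = event.results.item(i);
      const text = result.item(0).transcript.trim();
      if (!text) continue;
      if (result.isFinal) {
        this.finalText = this.finalText ? `${this.finalText} ${text}` : text;
      } else {
        interim += (interim ? ' ' : '') + text;
      }
    }
    this.interim = interim;
  }

  get text(): string {
    return this.finalText;
  }
}

// Hypothetical helper building a fake event from [transcript, isFinal] pairs.
function fakeEvent(resultIndex: number, entries: Array<[string, boolean]>): ResultEventLike {
  const results = entries.map(([transcript, isFinal]) => ({ isFinal, item: () => ({ transcript }) }));
  return { resultIndex, results: { length: results.length, item: (i: number) => results[i] } };
}

const buffer = new TranscriptBuffer();
buffer.handle(fakeEvent(0, [['hello welcome to', false]]));
buffer.handle(fakeEvent(0, [['hello welcome to angular', true]]));
buffer.handle(fakeEvent(1, [['hello welcome to angular', true], ['I learn typescript', true]]));
console.log(buffer.text); // hello welcome to angular I learn typescript
```

In SubComponent you could keep a TranscriptBuffer field, call its handle(e) method inside onresult, and set this.message to its text, optionally followed by its interim value as a live caption.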

Once you click the Voice end button and the voice input ends, the complete sentence is shown in the message field. I hope you find this article helpful.